Using PyCOMPSs as the Wrapper Engine

Hello, @dbeltran, @bdepaula, @mcastril, and @macosta:

I am looking into ways of optimizing the wrapper engine, that is, the execution of the tasks within the same package. One option is to use the COMPSs runtime through its Python library, PyCOMPSs. Several of its features could benefit us:

- Abstracting away memory management. Tasks within COMPSs see the memory allocated across all nodes as a single pool. The runtime takes care of data locality and minimizes data movement.

- We could set a fixed amount of resources and let the runtime execute tasks whenever it is possible. For example, if two tasks each need a full node, instead of asking for two nodes to run them in parallel, we could ask for a single node and the runtime would execute each task whenever the node is free.

- We can define tasks as binaries, so we don't need to change the underlying code of the scripts/models. Daniele has confirmed to us that COMPSs doesn't really care what is being run.
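To make the second and third points concrete, here is a rough analogy using only the Python standard library. It is not COMPSs or PyCOMPSs code and says nothing about how the real runtime schedules work. It only illustrates the idea of a fixed allocation (one node) shared by opaque external commands that run whenever the node is free. `ALLOCATED_NODES`, `run_binary_task`, the task names, and the placeholder commands are all hypothetical.

```python
import subprocess
import sys
import threading
from concurrent.futures import ThreadPoolExecutor

# Hypothetical allocation: a single node, so at most one full-node task at a time.
ALLOCATED_NODES = 1
node_pool = threading.BoundedSemaphore(ALLOCATED_NODES)


def run_binary_task(name, command):
    """Run an external command once a node is free; the command is opaque to us."""
    with node_pool:  # blocks until a node in the allocation is free
        result = subprocess.run(command, capture_output=True, text=True, check=True)
    return name, result.stdout.strip()


# Placeholder commands standing in for the real post-processing scripts.
tasks = {
    "post_ocean": [sys.executable, "-c", "print('ocean done')"],
    "post_ifs": [sys.executable, "-c", "print('ifs done')"],
}

# Both tasks are submitted at once, but the semaphore serializes them on the node.
with ThreadPoolExecutor(max_workers=len(tasks)) as executor:
    futures = [executor.submit(run_binary_task, n, c) for n, c in tasks.items()]
    results = dict(f.result() for f in futures)

print(results)
```

Both tasks are submitted up front and neither script is modified. The only thing that changes with the size of the allocation is how many of them run at the same time.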

Applicability

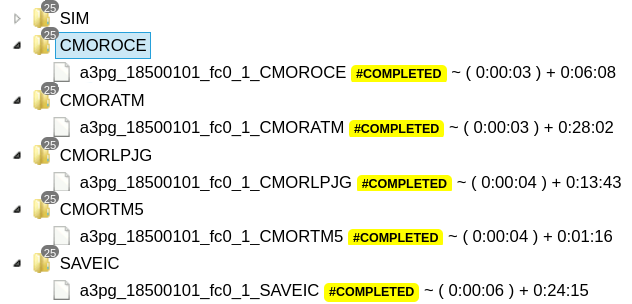

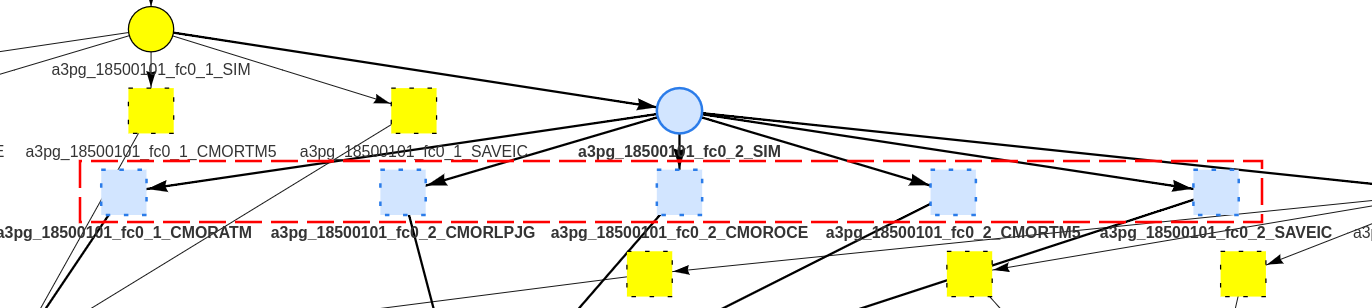

We had a meeting with Daniele Lezzi, from Computer Science's Workflows group, where we laid out the example of Auto-ECEarth3. In this workflow, we have one post-processing job per submodel (ocean, ifs, lpjm, etc.), and each of them has different computing needs (check the runtime in the tree view). A first proof of concept would be to run all of these tasks in a single wrapper, as in the graph view.

The challenges

I am concerned about the portability of the tool. Daniele told us that it is possible to run the COMPSs runtime in a containerized way, and even (as far as I understand) from outside the remote platform, if the platform allows its nodes to connect to the outside world. For MareNostrum, they have everything set up so that it is easy to execute using their launcher from the remote platform. In our case, however, that would mean giving up Autosubmit's own allocation of SLURM resources via sbatch, which, as of now, is not beneficial for Autosubmit.

Also, we want to make it generic, in the sense that Autosubmit would create the PyCOMPSs workflow at the user's request, and that seems like a pretty substantial problem.

Miguel said that Javier Conejero tried to reproduce one of our workflows (was it auto-ecearth3?) in PyCOMPSs, but that effort was eventually stopped.

Next Steps

- I plan to run a couple of simple tests with PyCOMPSs to understand it.

- Then, if continuing with the tool proves beneficial, I will try to abstract it away and make it generic.